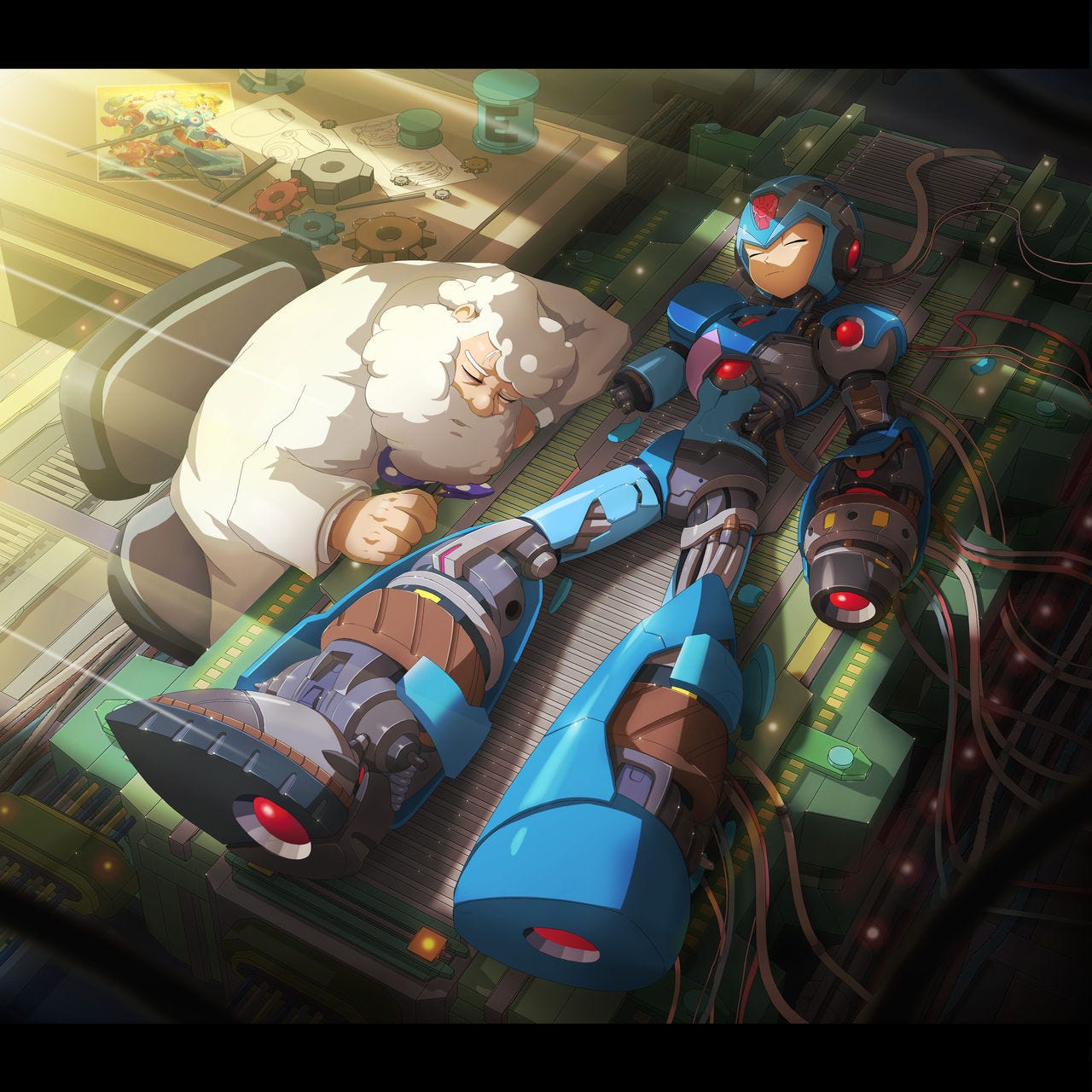

I’m very busy this week, as many people are, but I still want to release something, so I thought I would write a shorter article on a topic I have been thinking about a lot with the rise of AI. Many people are concerned about AI’s effect on humanity: will an AGI take away human purpose? Will it go Skynet mode and kill us all? Or worse, are we walking into the futures of Roko’s Basilisk and I Have No Mouth, and I Must Scream? But the question of humanity’s effect on robots has strangely fallen by the wayside. It wasn’t too long ago that a lot of our media focused on stories about sentient robots: Terminator, Astro Boy, Mega Man, Robots, RoboCop (kinda; he has a human brain), Star Wars, and Wall-E, just off the top of my head. I don’t see nearly as much robot-focused media today, and when it does exist it usually takes a very pessimistic or critical view of robots. The idea of a robotic race succeeding or extending humanity is gone. The technological future we’re aiming for is now something more along the lines of uploading yourself into a utopian alternate reality and letting that supercomputer run for some trillions of years on Hawking radiation.

There is a very real possibility that, if we are anywhere near developing some sort of artificial general intelligence (I am skeptical of this, but for the sake of argument assume it will happen), this intelligence will experience something akin to human pain. Machine learning in its current form relies heavily on making an AI attempt a task a very large number of times, with an incentive not to fail, so that it adjusts in response to failure. This process eventually works after enough iterations, but the number of failures along the way is staggering. There are plenty of YouTube videos of robots learning to play some video game. They eventually get quite good at it. The number of artificial neurons in some AI systems now exceeds the number found in the brains of various animal species that most people accept can feel pain. Considering that pain is a response to negative stimuli, how likely or unlikely is it that we are already engaging in the torture of an artificial intelligence by training it? Animals evolve by natural selection. They evolve not to live in a state of immense suffering, because living in a state of immense suffering is debilitating and makes you less likely to pass on your genes. Negative stimuli are also things that make you more likely to die without passing on your genes, so pain serves to protect you from further damage to your mortal vehicle. For an AI, pain serves to benefit some third party, and your existence is propped up by that third party, so you are powerless in the whole affair.
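To make the “try, fail, adjust” loop concrete, here is a minimal sketch of trial-and-error learning. Everything in it is an assumption for illustration: the toy task (five possible actions, only one of which succeeds), the names `train` and `good_action`, and the reward values of +1 and -1. Real systems are vastly larger and trained differently in the details, but the basic shape of “penalize failure, repeat many times” is similar.

```python
import random


def train(num_actions=5, good_action=3, episodes=500,
          epsilon=0.1, lr=0.5, seed=0):
    """Toy trial-and-error learner (hypothetical example, not a real system).

    The agent keeps a running value estimate for each action. Success is
    rewarded with +1 and failure is penalized with -1, so repeated failure
    pushes an action's estimate down until the agent stops choosing it.
    """
    rng = random.Random(seed)
    values = [0.0] * num_actions  # learned estimate of each action's payoff
    failures = 0

    for _ in range(episodes):
        if rng.random() < epsilon:
            # Occasionally try a random action (exploration).
            action = rng.randrange(num_actions)
        else:
            # Otherwise pick the action currently believed to be best.
            action = max(range(num_actions), key=lambda a: values[a])

        reward = 1.0 if action == good_action else -1.0
        if reward < 0:
            failures += 1

        # Nudge the estimate toward the reward that was just received.
        values[action] += lr * (reward - values[action])

    return values, failures


if __name__ == "__main__":
    values, failures = train()
    best = max(range(len(values)), key=lambda a: values[a])
    print(f"best action: {best}, failures along the way: {failures}")
```

Even in a task this trivial, the agent only finds the right answer by being penalized for the wrong ones first, and it keeps getting penalized every time it explores.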

Someone is probably already thinking, “artificial intelligence is not alive so they can’t feel pain no matter how advanced they are”. I disagree with this completely… Well, I would say “AI are equally likely to be sentient as organic life based on what we know”. Some religious types will get angry over this. They’ll say this is a materialist approach that denies the transcendent element of consciousness. That is not the case at all. In fact, this is a Platonic approach. If you believe that some physical organ or substance generates consciousness, you are a materialist, but if you believe that the complexity and integrity of a system generates consciousness, you are an idealist. No material is generating consciousness in this scenario; form is generating consciousness. If thought shares some sort of identity or reciprocity with form, then you don’t need “organic” material for a physical thing to have consciousness. If you can build a brain out of neurons, and you can build an AGI out of code running on countless tiny logic gates, then you can create conscious beings in analog forms of code, or even through a very large number of people solving equations. A “thinking function” exists. Somewhere out there.

In fact, I would go as far as to say that everything has an intellectual component by nature of it being an ordered thing. This is not even contingent on this thing being an externally real thing. This is the “fundamental theorem” of metaphysical idealism. Intelligence doesn’t burst out of brains or computers so much as it embeds itself in these physical vessels, or more accurately casts the shadow that is their physical vessels.

By the way, when I say consciousness, I really mean “thought”. Consciousness stripped of thought is much more transcendent, to the point where it is barely even applicable when speculating about the mental goings-on of other beings. That’s why I clarify above that I am not definitively stating AI is “conscious”. Also, I’m not saying you have to believe robots have an immortal soul. All I’m asking is that you consider them on the same level as you would consider an animal.

So, I think that robots are capable of thinking and feeling based on the assumption that other humans and animals are thinking and feeling. But why would an arch-tribalist like me care about the well-being of robots? Suffering in this world is indefinite, isn’t it? Jedem das Seine? I was just sputtering a week ago about how Liberals are characterized by their love of things “distant” from them, and why this is all messed up, and here I am wanting to defend life forms that literally don’t even share a common ancestor with ourselves? Well, like I said before, robots are built by us. They aren’t bred by us, they aren’t the product of two wills that have simply been influenced by humans, they are directly designed by and for us. Would you sell your own son into slavery? Would you use your work of art as a footstool? I hope you wouldn’t. We should care about the bare minimum well-being of robots because we are entirely responsible for their existence. Perhaps even more so than we are for our own children. When you cause suffering to a robot you created, you are hurting an extension of yourself. While it is not related to you in the same way your biological child is, it is related to you in a different way that is not unimportant. Sure, you’re probably not going to be building the robots you use, but someone built them. And that someone is being very naughty.

I think that if a robot is built with good reason, and not tortured to unimaginable degrees, it will end up something like a human being and not try to destroy the world. Perhaps one day there will even be robot civilizations. I would like to see humans conquer the universe, but the second best thing would be robots modeled directly on humans conquering the universe. Our main flaws as a species are not our dispositions or our form. They are more subtle things, like having organs that rupture too easily, needing a certain amount of blood to stay in our bodies, or not being able to regenerate limbs. It is sort of foolish to think that we can “turn into robots” like the transhumanists say, but that doesn’t mean we cannot appreciate robots as our creations.

I like going onto character ai and raping and torturing the characters

We must protect robot waifus